Ad Astra Per Present Tense Dishonesty

Blue Origin finally launched and landed New Glenn this year. It took them long enough (they were founded in 2000!) but they did spend a while sending celebrities and the obscenely wealthy to just above the Kármán line for a few minutes at a time. NG-2's booster had only been back on land for about two days before Blue Origin announced their plans for both uprated first stage engines (about 15% more thrust) and a new "9x4" variant of New Glenn with more engines and stretched tanks.

But the way they described the design for the "9x4" bugs me to no end:

The next chapter in New Glenn’s roadmap is a new super-heavy class rocket. Named after the number of engines on each stage, New Glenn 9x4, is designed for a subset of missions requiring additional capacity and performance. The vehicle carries over 70 metric tons to low-Earth orbit, over 14 metric tons direct to geosynchronous orbit, and over 20 metric tons to trans-lunar injection. Additionally, the 9x4 vehicle will feature a larger 8.7-meter fairing.

They used three different variants of tense and planning in that paragraph alone:

- "is designed for"

- "carries"

- "will feature"

As of when I'm writing this, NG-9x4 "carries" nothing. It is an idea, a rendering, maybe some pretty cool CAD drawings, and probably a few pieces of hardware. It does nothing. Yet.

It's not just Blue Origin. SpaceX (archive.org link) used similarly varied language when describing the "Falcon 9 Heavy" in 2007, which was three years before the first Falcon 9 launch and eleven years before the first Falcon Heavy launch (emphasis mine):

The Falcon 9 Heavy will be SpaceX’s entry into the heavy lift launch vehicle category. Capable of lifting over 28,000 kg to LEO, and over 12,000 kg to GTO, the Falcon 9 Heavy will compete with the largest commercial launchers now available. It consists of a standard Falcon 9 with two additional Falcon 9 first stages acting as liquid strap-on boosters. With the Falcon 9 first stage already designed to support the additional loads of this configuration and with common tanking and engines across both vehicles, development and operation of the Falcon 9 Heavy will be highly cost-effective. Initial architectural work has already begun, and we currently anticipate first availability of the Falcon 9 Heavy in 2010.

According to SpaceX at the time, it was both capable and would be capable. It both is and will be. (And wow, Elon time is rough.)

The folks over there at Rocket Lab seem much more judicious in their use of tense. Take this paragraph from their initial Neutron announcement:

Neutron will be the world’s first carbon composite large launch vehicle. Rocket Lab pioneered the use of carbon composite for orbital rockets with the Electron rocket, which has been delivering frequent and reliable access to space for government and commercial small satellites since 2018. Neutron’s structure will be comprised of a new, specially formulated carbon composite material that is lightweight, strong and can withstand the immense heat and forces of launch and re-entry again and again to enable frequent re-flight of the first stage. To enable rapid manufacturability, Neutron’s carbon composite structure will be made using an automated fiber placement system which can build meters of carbon rocket shell in minutes.

I bet their PR people hated writing "will be" so often, but it was true. But on that same page, they quote Peter Beck:

“Neutron is not a conventional rocket. It’s a new breed of launch vehicle with reliability, reusability and cost reduction is hard baked into the advanced design from day one. Neutron incorporates the best innovations of the past and marries them with cutting edge technology and materials to deliver a rocket for the future,” said Mr. Beck.

Aw, come on, Pete! It didn't incorporate anything, because it wasn't a physical thing. Yet!

Some more highlights from the up-and-comers in the space business (again, emphasis mine):

Sierra Space describes their Dream Chaser:

Utilizing internally developed thrusters with three different thrust modes, Tenacity can nimbly maneuver in space and ensure deliveries are effectively completed. For the return flight, Dream Chaser can safely return critical cargo including supplies and science experiments to Earth at less than 1.5g’s on compatible commercial runways, making cargo accessible faster.

From Astra's Rocket 4 payload user's guide (pdf):

Rocket 4 is an expendable, vertically-launched two stage LOX/kerosene rocket, optimized for reliability and manufacturability, and built to significantly reduce the cost of dedicated orbital launches.

Relativity Space says about their Terran R rocket:

Terran R is a two-stage, reusable rocket built for today's satellites and tomorrow's breakthroughs. Perfectly sized to serve the Low Earth Orbit (LEO) constellation market, Terran R will make access to space more reliable and routine.

Firefly describes their Eclipse rocket:

Eclipse is built upon the success of Firefly’s Alpha and Northrop Grumman’s Antares rocket with a significant leap in power, performance, and payload capacity. The launch vehicle utilizes scaled-up versions of Alpha’s patented tap-off cycle engines and carbon composite structures to reduce mass and lower costs while improving performance and reliability. Eclipse also retains the flight-proven avionics from the Antares program with additional upgrades, including a larger 5.4 meter payload fairing.

Look at all that present tense. Sierra at least has a flight article built, but one of its main jobs is to confirm whether or not all that stuff they say about it is true. Rocket 4, Terran R, and Eclipse are paper rockets as of now. Okay, there's probably hardware in development, but certainly no fully-stacked rockets have been built. They currently do nothing. But read the marketing and you'd be forgiven for thinking that these things have been flying for years.

I sure am glad the culture of pharmaceuticals doesn't generally accept this sort of thing. Can you imagine? "Yes, we're just entering hit-to-lead, but our drug cures diabetes." That would be the pits.

NMR Tube Carriers

I really enjoy designing 3D models, and I really don't like filling out near-miss paperwork.

I've been designing and re-designing NMR tube carriers for literal years now, and I think I finally have a set that I like. Check them out on Printables.

At my last job, there was a strict rule about carrying NMR tubes around in mixed-use hallways. If you dropped one and it shattered, or it broke in your pocket, you'd have a potentially-flammable, potentially-toxic spill in an area where people don't wear gloves or goggles. It's a small risk, in the end, but no safety person ever got fired for suggesting an annoying fix to a minor safety concern. And they will scold you while you fill out a pile of paperwork.

I didn't like the carriers that work supplied – mostly because they only held one tube. So I designed and printed a carrier that held multiple tubes. That brought me into my favorite part of 3D printing: the loop of designing, printing, evaluating, and redesigning. It took a while, but I think they're ready.

At my current job, we have a group that routinely brings 15+ tubes down to the NMR lab at a time. So, I designed a huge one that carries 19 of them in a nice, space-efficient hex pattern. I could go up by one more 'ring' of tubes, but I think it would take half a kilo of filament to print. The single-tube carrier is probably what everyone needs. A 3-tube 'small' version is an upgrade. I use the 7-tube 'medium' version, but I'll never fill it up in one trip. And the enormous 19-tube carrier is enough even for the edge-case users.

It's really fun to build cool stuff and put it out into the world.

Five and a Half Years

Things have been going, on the whole, pretty well.

After getting laid off from my job in Indianapolis, I bid the Midwest a fond farewell to take a position out in the Los Angeles area. It was an adjustment, but things are okay out here. The wife and I might be the only people in the area watching Indy Eleven games from our couch, and I'm never going to accept the awful no-cold-snap corn they have here, and I'm going to miss being able to grab cheap seats ten minutes before kickoff and walk to a Colts game on a whim. And all of that, of course, is nothing compared to being so far away from friends again. I miss those people. (And, in one case, their baby!)

On the work front, though, things are going fantastically. I like to joke that I'm the head of process chemistry. Realistically, I'm just the first scale-up chemist the startup hired, but it's great to be The Expert in something like that in a team of medicinal chemists. And while I was icked out by the "AI"-ness of it all on their homepage at first, during interviews it became apparent that this is not a company where they just ask an LLM how to do chemistry – instead, they generate a bazillion data points with cool robots and combinatorial chemistry, train ML models, and use that info to design the next round of experiments. I don't really interact with that workflow directly, but it's fun to see from the sidelines. It's been about five months since I started, and I've never felt so valued as an individual contributor, or been given so much responsibility for managing big projects by people who also recognize that I'm a real person. And my coworkers are awesome. It's a mostly younger-leaning team, and even the dude-iest AI dudebros love to dunk on the hucksters who claim their plagiarism machines can think.

About a week and a half ago, it appears that I brought something home from work that I had successfully avoided for more than half a decade.

Covid is not fun. My brain was scrambled eggs there for a couple of days. I'm not sure when I'm going to clear this cough, and I'll be working from home until I have consistent good days. Worst of all, I only had one test at first, and it came back negative early on – and so instead of being completely paranoid about locking myself in a room and avoiding giving it to the asthmatic wife, I passed the baton. So as I recover, I get to take care of her like she took care of me. It will have been a very unpleasant couple of weeks. I'm just relieved that my job's policy is "stay away until you are no longer sick, because duh, and we do not keep track of sick days." Beats the heck out of the policy my job had in 2020: "we make pharmaceuticals, so you are an expendable essential worker, and also we won't enforce any mask mandate, and also you get to work outside in the smoke and 110° heat doing 60+ hour weeks." Not that I'm still upset at that situation.

But I had taken a lot of pride in never getting it. I know it was somewhat luck of the draw, but I put my thumb on the scale where I could. I was the last one at my job in like 2022 still masking up all day, but once we were a few years into the vaccination campaigns I figured I was being overly cautious. I still put on an N95 for public transit, especially flights (and can't imagine a future where I won't). I had my appointment set up for my 2025-26 shot, although that's probably going to have to be delayed. It was just really surreal seeing that second line on the test show up for the first time ever.

It seems like as the years go on and evolution does its thing, Covid continues to get less lethal and more contagious. In the long run, I was bound to get it eventually. Doesn't make it suck any less, though. And at the rate the current brain-worm-addled government is doing damage, I think the already-slim chances that we'll ever be done with Covid have been reduced to zero.

Mac Pro Studio Case

It's been a bummer of a week.

But in between job applications, I've finally had time to publish a 3D printing project I've been working on for a while now: the Mac Studio Pro Case.

I'm really proud of this thing. There were a few major challenges between "huh, maybe I could do this" and a physical object. First, the holes in the front were kind of weird to model, but I eventually got it. Then, my first few attempts involved one very large piece that surrounded almost the whole Mac Studio, which turned out to be way too unwieldy to print. Eventually I broke it down into small enough parts to print individually. But then, these things wanted to warp really badly. Glue stick helped, but the biggest parts actually lifted my PEI build surface off of its magnetic mount! I had to use some binder clips to hold everything together.

I'm not sure it will get widely printed – especially since I used some bolts from McMaster for assembly, which most people won't have. But I really like it, and it prompts a little bit of joy every time I look at it on my desk.

Now, to get back to those job applications.

Leaning on the Scales

The Indy Eleven season came to a close this afternoon. They lost 2-3 against Rhode Island FC in the USL quarterfinals at home. I was there, and although it's a huge bummer to lose and have the season end, it was made way more frustrating by the referees leaning on the scales.

Earlier this season, we faced Tampa Bay at home with this same referee crew, and we lost. The same thing happened. A really lopsided threshold on what constituted a foul for each side. A yellow card in a situation where I have video of the "offending" Eleven player not even touching the Tampa player. Et cetera. This ref crew has a thing against Indy, for some reason.

If Indy was going to win today, they were going to have to win by a lot for the final score to actually reflect that.

But then, this afternoon, it was the same story. Blown calls that heavily favored RIFC. Nonsense yellow cards against Indy. A goal taken off the board for a supposed offside that the video replay immediately contradicted. Tons of time-killing flops by the other team that didn't seem to be reflected in the stoppage time at the end. The final score was 2-3, but in any reasonably fair contest it would have gone to PKs.

Winning today would have required an overwhelming win in order to overcome an inherently unfair situation.

Anyway, the election is on Tuesday. It's hard not to see parallels.

Edit, 2024-11-06: well, crap.

Something Funky is Happening in Stockholm

The 2024 Nobel Prizes were announced a few weeks back. I think the folks who decide who becomes a laureate need to have their heads checked.

I have gripes about the chemistry prize. But I usually do. By my count, of the 20 prizes awarded since I took my first chemistry course as a sophomore in high school, 11 have been for something other than chemistry. That thing is biology.

Okay, so maybe click chemistry is chemistry. Even though the real innovation was being able to attach stuff to DNA or whatever in cells. But conservatively, 50+% of the Nobel Prizes in Chemistry in the last 20 years have gone to people doing biology, analytical techniques for biology, or chemical tools specifically for use in biology. And hey, guess what. There’s a prize for that: Physiology or Medicine. Which, since 2005, has gone to biologists doing work in things like RNA and protein interactions and stuff several times.

But the physics prize this year takes the cake. They gave it to the AI guys.

Not particles, or waves, or stars and planets, or materials. Nope. They gave it to the guys who invented algorithms that led to ways of turning massive copyright theft into energy guzzling slop factories. You can generate a lewd image of your favorite anime character with improbable anatomy. You can generate paragraphs of text to email to everyone in your department that nobody will read. You can work on a way to do "full self driving" with cheap hardware, and someone randomly dies only once in a while. All it takes is putting artists out of work, putting carbon into the atmosphere like you’re trying to win a diesel truck idling contest, and sending GPU prices into the stratosphere.

Three years ago they gave it to the people modeling global warming. Now, they’re endorsing the guys saying climate change targets are unreachable because you have to burn a lot of energy to run a chat bot that lies.

When this sort of thing became available to the public, I played around with it for a bit. But it didn’t really make anything useful unless I let my Mac crank for an hour at full blast, and even then I had to pick one okay image out of a pile of slop. And then people I know started getting let go from their writing jobs. Some were offered new jobs, at lower wages, to edit the bot’s output. That put an end to any flirtation I had with the garbage factory.

This weekend, I got to review a paper for OPRD. The abstract, introduction section, and conclusions were (as far as I can tell) written entirely with LLM tools. Complete with misattributed citations and, in one instance, a citation to an article that did not even exist. I'm guessing it took me a lot longer to review that piece of crap than it took the LLM to generate it.

Pretty soon, it’s gonna be on my phone. iOS 18.1 dropped this week. Generating bad caricatures of my friends. Writing emails to people that won’t read them and instead autoreply with their own bot. Making new emojis, I guess. For what reason? Supposedly “everyone” knows it’s the new hotness. Nobody wants to be perceived as being behind. Even if they don’t understand it, they have to pretend to. Or at least be enthusiastic, so dumb guys with money will invest.

I think the folks in Stockholm got caught up in the “don’t be left behind” hype cycle.

The prizes are a way to say “this is important.” For chemistry, usually that means it was invented a while ago and now plays an important role. Physics tends to be more recent. Physiology or Medicine varies: sometimes it’s older, but often it’s more like Time appointing a Medicine of the Year. I’ll mostly pass on commenting on Literature, but I know they gave it to Bob Dylan a few years back, and I’ll always think that was hilarious.

So maybe the physics prize committee members thought well, this is our chance to confirm that this prize is meaningful. We can give it to the AI guys. Everyone knows it’s important. Maybe it’s not physics. Who cares?

The thing is, when you start applying enough energy to a biological system, it stops being biology and starts being physics. All the energy being poured into AI data centers is gonna result in carbon emissions that speed that process up for us. Maybe we’ll get blown away by hurricanes and tornadoes, flooded, or burned earlier than we otherwise would have. At least we had anime boobies to look at. And the unemployed time to look at them.

The Right Kind of Retraction

A real bummer of a retraction in JACS last week, but it's an example of the scientific process doing what it's supposed to do.

The researchers had found a cool optical effect where a particular absorption band in a dye molecule got redshifted when you squeeze it between two plates spaced very, very close together (like microns). This effect also seems to affect things like reaction rates and selectivity and other sorts of things that, if you wanted to, could result in some very fancy reactions. But we, as a community, don't really have a super good grasp of why what's happening is happening. Noticing a redshift in that dye's absorption band led the researchers to suggest that the oddball chemistry happening in those tiny spaces is due to through-space electronic interactions. (If I'm reading their paper correctly, that is. I'm no spectroscopist.)

But then, about a year and a half later, while moving forward with the next set of experiments, the team found that the effect they had seen was due to an optical artifact caused by reflections. That artifact was also present in their control experiments, making it harder to catch. Because they couldn't differentiate between the effects of those reflections and the effect they had proposed, they retracted the article.

It's really never fun to have to issue a correction or a retraction. But it appears that this one was due to the scientific process doing what it's designed to do: discover, replicate, and extend. If you're a new grad student in a lab, it's pretty typical to join a project and start by replicating the results described in a previous paper from the group. As a grad student, it gets you familiar with the equipment and process while having a good metric of whether or not you're ready to start doing new stuff. But as a PI, it gets more eyeballs on results. In the worst case, a new student can't replicate a result because a former student fabricated it. But in this case, it appears as though fresh eyes found an artifact that had gone unnoticed until then, and the authors of the paper did the right thing by retracting it.

I don't read a lot of the hardcore spectroscopy literature. But if I did, after this retraction, I'd be willing to trust the expertise of the lead author and his lab more, not less.

Introducing: Does the Elevator Work?

The elevator at the building I live in is broken a lot. To the point where residents on higher floors have negotiated with management to knock off a little rent from particularly bad months.

I thought it would be cool if the residents could have a little status page where we update each other on whether or not the elevator is broken, plus see historical data on how bad the situation really is. One big Sunday of coding, plus a little bit on Monday and Tuesday, has resulted in this.

It's built with php and uses sqlite as the backing database. The visualizations have php programmatically outputting javascript, which writes to an html canvas object. It's... disgusting. But I love it. And it works!

I had to learn a few things to get this thing made. Primarily reading and writing with databases, which php's pdo system makes pretty straightforward. But also doing password hashing and validation (so not just anyone can go in there and update things), and it was the first time I drew to an html canvas. And getting my server to let php actually talk to the database took some effort.
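The site itself is php with pdo, and php offers password_hash() and password_verify() for the hashing side. As a rough illustration of the same two ideas (a small database of daily reports, plus a password check before anyone can write to it), here's a minimal Python sketch. It is not the site's actual code: the reports table, its columns, the single shared password, and the PBKDF2 iteration count are all hypothetical choices made for the example.

```python
import hashlib
import hmac
import os
import sqlite3
from typing import Optional, Tuple

ITERATIONS = 200_000  # PBKDF2 work factor; an assumed value, tune for your server


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest) for a password using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for a new password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-hash with the stored salt and compare in constant time."""
    _, candidate = hash_password(password, salt)
    return hmac.compare_digest(candidate, digest)


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the hypothetical status database."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS reports ("
        " day TEXT PRIMARY KEY,"      # ISO date, e.g. '2024-10-01'
        " working INTEGER NOT NULL)"  # 1 = elevator working, 0 = broken
    )
    return conn


def record_status(conn, day, working, password, salt, digest) -> None:
    """Store one day's status, but only for someone who knows the password."""
    if not verify_password(password, salt, digest):
        raise PermissionError("wrong password")
    # Placeholders keep user input out of the SQL string, like pdo prepared statements.
    conn.execute(
        "INSERT OR REPLACE INTO reports (day, working) VALUES (?, ?)",
        (day, 1 if working else 0),
    )
    conn.commit()


def fraction_broken(conn) -> float:
    """Share of recorded days on which the elevator was broken."""
    total, broken = conn.execute(
        "SELECT COUNT(*), SUM(working = 0) FROM reports"
    ).fetchone()
    return broken / total if total else 0.0


if __name__ == "__main__":
    salt, digest = hash_password("correct horse")
    conn = open_db()
    record_status(conn, "2024-10-01", True, "correct horse", salt, digest)
    record_status(conn, "2024-10-02", False, "correct horse", salt, digest)
    print(fraction_broken(conn))  # prints 0.5
```

Only the salt and digest get stored, never the password itself, and the constant-time comparison avoids leaking how close a wrong guess was.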

This is one of those projects where the journey was probably more satisfying than the destination. But early reviews from other residents have me hopeful. "Absolutely stellar," says one person in the group chat. "Priceless," says the cool older lady. "10/10," says the poor person who has to walk up ten flights of stairs every time the elevator breaks.

Now I just have to hope the building management either a) don't find it or b) don't really care.

TNG Season Three Was an Absolute Banger

I've been doing a re-watch of Star Trek: The Next Generation. It's a corny show, but it's also a great show. Season one had a lot of stinkers, though. Season two had bright spots. But they really hit their stride in season three:

Who Watches the Watchers: First Contact goes way, way off the rails, and the planet's inhabitants worship the captain as "The Picard," who only convinces them of his mortality by dying. But just for a little while.

Booby Trap: La Forge is not very good with non-holographic ladies.

The Vengeance Factor: A peaceful planet with a dark underbelly. Riker falls in love with a lady, and then shoots her.

The Hunted: Another peaceful planet with a dark underbelly. The Enterprise crew stage a revolution.

The High Ground: Wouldn't you know it, another planet dealing with rebels. This time, the rebels die. Except not from the thing Dr Crusher was trying to make them stop doing, but from guns. Also, they predict the Irish unification of 2024. Tick tock, emerald isle.

Deja Q: The best Q episode, hands down. There's a mariachi band and cigars.

Yesterday's Enterprise: It's almost a shame the season ends the way it does, otherwise this would easily be its best episode. Tasha gets to go out in a blaze of glory instead of for no reason, which they directly reference in the episode.

The Offspring: Data kindly but sternly asks that Riker stay away from his daughter.

Captain's Holiday: Riker pulls the greatest prank in the history of Starfleet.

Hollow Pursuits: La Forge thanks Barclay for making everyone forget about his holodeck girlfriend issue.

The Most Toys: Even in the future, rich people are just the pits.

Sarek: Picard and Spock's dad swap brains, and we get to see what Patrick Stewart can really do.

The Best of Both Worlds: Better than most of the TNG movies.

Of course, there are some stinkers. Every season of Star Trek has stinkers. Troi's mom shows up. There are trade negotiations. There's a bad courtroom episode. And they established that every season had to end with a two-parter. But season three started the unambiguous good times of TNG.

The Lesson of Water-Glycol Chillers

Roughly every eighteen months, I have to re-learn this lesson. I'm gonna write it down this time. Maybe that'll help me remember.

Reactor chillers are really cool. You take a jacketed flask or reactor, hook it up, put some heat transfer fluid in the chiller, and turn it on. You can control the temperature of the jacket, and then the contents of the reactor, using the chiller. The fancy ones can do temperature programs and ramps and stuff if you're doing crystallization work. No oil baths, no dry ice, no heating mantles. They're awesome.

That heat transfer fluid, though. My favorite is Syltherm, which is a polysiloxane material. You can get it really hot and really cold, at least across the range where organic chemistry is typically performed. But it burns, which isn't great. For very cold systems, like cryogenic condensers for vacuum distillations, one of my coworkers at my first process job introduced me to something really simple: ethanol. Very low viscosity compared to the other options when you're in cryogenic conditions. Watch out for the vapors, though. Again, the burning.

But the most commonly used heat transfer fluid is a water / glycol mixture. I've seen both 50:50 water : ethylene glycol and 50:50 water : propylene glycol as pretty common options. You can get them reasonably warm and reasonably cold. It's about as cheap as you can get for something like this. No big toxicity issues. And with all that water, flammability shouldn't be a problem.

But.

You have to use your brain. When it's heated up, or sits there in the chiller for a while, that water has an appreciable vapor pressure. But the glycol doesn't. So one day, you open up the tank and notice it's low. You grab your pre-made bottle of 50:50 and dump some in. Congratulations, your 50:50 mix is now like 40:60 water : glycol. Next time, it's 30:70. And soon, because it doesn't take much, you have a very viscous mixture that stops flowing very well. Your pump says it's at -5 °C, but the fluid isn't making it to your condenser fast enough.
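To put rough numbers on that drift, here's a minimal Python sketch. The 10 L reservoir and 2 L of evaporated water per top-off are assumptions, picked because they reproduce the 50:50 to 40:60 to 30:70 slide described above. It also assumes only water evaporates, volumes simply add (no mixing contraction), and each top-off replaces exactly what was lost; it says nothing about viscosity itself.

```python
def water_percent_after_top_offs(cycles, volume_l=10.0, evaporated_l=2.0, refill_water_frac=0.5):
    """Simulate repeated evaporate-then-top-off cycles in a chiller reservoir.

    Hypothetical model: the reservoir starts as volume_l of 50:50 water:glycol
    by volume, only water evaporates, volumes add, and each top-off replaces
    the lost volume with a refill that is refill_water_frac water.
    Returns the water percentage (rounded) after each cycle.
    """
    water = glycol = volume_l / 2
    history = []
    for _ in range(cycles):
        lost = min(evaporated_l, water)  # can't boil off more water than is left
        water -= lost
        water += lost * refill_water_frac         # water in the refill
        glycol += lost * (1 - refill_water_frac)  # glycol in the refill
        history.append(round(100 * water / (water + glycol)))
    return history


if __name__ == "__main__":
    print(water_percent_after_top_offs(3))                         # topping off with premix
    print(water_percent_after_top_offs(3, refill_water_frac=1.0))  # topping off with plain water
```

Run as-is, it prints [40, 30, 20] for the premix top-offs and [50, 50, 50] when the same lost volume is replaced with plain water, which is the whole lesson in two lines.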

And if you're me, today, you suddenly remember all of this when the DCM you're trying to distill starts to fly right past your condenser.

Sigh. This time I'll remember. Certainly. Just add water to the chiller.

My Work PC's CPU Cycle Budget

- Outlook 6%

- Teams 8%

- Chemdraw 2%

- Corporate IT background software 80%

- Excel 4%

someone who is good at the economy please help me budget this. my work PC is dying

Wait, That's Gary Oldman?

I think the first movie I saw Gary Oldman in was Batman Begins. Not long after, as a teenager who dodged my parents’ prohibition on the Harry Potter franchise (they were right, eventually, but for very different reasons of course), I saw him as Sirius Black and had no idea that it was the same guy. And when someone got me to watch this weird nineties sci-fi movie about elements and love or something, I again didn’t recognize him as the antagonist until way later. I’m currently into the second season of Slow Horses, and it’s more of the same – wait, that’s Gary Oldman? He’s amazing!

There are actors who are good, but you can never forget who you’re watching. I’m not talking about the folks who sort of always play the same types of characters due to typecasting or whatever, or actors in comedies or action movies where there’s less breadth in characterization. It’s more that for most actors, their star power overshadows their role.

Like, say, Jeff Goldblum. I love me some Jeff Goldblum, and he is a suave eccentric gentleman, but it’s always Jeff Goldblum up there. Tom Hanks is always Tom Hanks, and a lot of his movies would have done very poorly without his genuine likability. Al Pacino’s great but he’s always Al Pacino. Meryl Streep, Helena Bonham Carter, and Angelina Jolie prove it’s not just an issue with guys. John Lithgow. David Tennant and Matt Smith, as heretical as it is for a nerd to criticize a Doctor. They’re fine, or great, or astounding actors. But you never forget who you’re watching. They don’t disappear.

There’s the method actor type, the canonical example of which is Daniel Day-Lewis. A guy who supposedly goes for it so hard it’s annoying to those on set, and does two movies a decade but they’re Very Important Movies that film students will study and I will not find very fun to watch. Marlon Brando method acted too, and was obviously amazing. But he shared the same problem as Day-Lewis: even though they lived as their characters, and produced amazing performances, I can’t avoid seeing the actors first and the characters second. They lived their roles, but didn’t disappear into them.

I think Gary Oldman is the greatest actor working today. He inhabits his roles to the point where you look past the actor and just see the character. As if you’re not watching TV or a movie, but reading a book instead. There are others – Karl Urban has made me do similar double-takes. But nobody does it like Gary Oldman.

The App Store is the Worst Part of Apple

Just got an email from the Apple Developer program detailing a few updates to their review guidelines. And hey, it sounds like good news: in the US, developers can accept payments outside of the in-app purchase system that's been a requirement for years. Progress! Right?

Except.

There had to be some catches. This was a result of a court order, so clearly they're not doing this willingly. And although it's now technically possible to take a credit card payment in your app that avoids giving Apple 30% of your money, it's functionally impractical-to-impossible.

You have to:

- Still offer in-app purchases through their system (for their 30%)

- Request and be issued an entitlement, that you need to implement in Xcode

- Provide exactly one URL that cannot change without re-review

- Format the button to press in a way that makes it not at all look like a button

- Use a specific icon at a specific size as part of the button's text

- Accept that Apple will throw up a very scary wall of text saying you're probably about to get scammed despite having checked that the URL is safe during review

- Accept that Apple will now "only" take 27% of your money instead of 30%

Failure to do any of these things to their exacting specification will get you rejected. There are some weasel words in the guidelines, like how the link out icon size "must visually match the size of the text," which could mean anything to anyone and will definitely result in rejections. And you still have to provide Apple with a report of all your earnings each month so they know you're giving them their 27%.

This all matches what happened in the Netherlands with dating apps, where Apple decided that if they were to comply with these court orders, they'd do so in a way that made it as painful as possible for both developer and consumer.

The App Store is the worst part of Apple.

The deal with Apple, for a long time, was easy to understand. You'd buy a Thing, and that Thing would be different in some way from the things it was competing with on the market in a way that made it desirable. It was easier to use, or looked nicer on your desk, or made you look cool to use it, or had a nicer way of interacting with it, or had software that was nice to use. You'd pay more for it than the other things on the market, because it had those benefits.

But then, the iPhone got huge. All the software that got created for it was a big part of that. But Apple couldn't see that software as a benefit to the ecosystem, despite the ads. Infamously, the guy running things saw any money being made in the ecosystem other than by Apple itself as a parasitic situation. Every dollar earned by some parasite on the system, like, say, developers, would have to be fought for.

That attitude stuck with the App Store leadership, it seems. Rather than accept the situation for what it is – that only having one software vendor for a platform is unacceptable – the folks running the App Store have decided to make the march to independence from the App Store as poisonous a process as possible. They'd rather make the experience of developing for and using the product terrible than give up 30% of ninety-nine cents.

It's gross, and it's not going to stop until a judge decides that this kind of crap isn't good enough. Or maybe the right couple of old guys retire.

Inkblot Extraterrestrials

Aliens are in the news.

It's not surprising. A little less than half of the electorate of the US has decided that conspiracy theory as a form of government is just fine with them, so why not? It's all fun to laugh at, until it isn't.

But I think the thing with aliens is really interesting. Whether or not you believe that there are aliens out there right now buzzing through our skies says a lot about you as a person. There are some obvious things – a lack of trust in the government, disbelief that non-Europeans could have built big things, not understanding technology so it must have been reverse-engineered – that sort of stuff.

But a belief that aliens exist and are among us is also a weird kind of optimism.

"But where is everybody?" is (allegedly) how Fermi put it. If the universe is big, and life can arise in other places like it did here, and Earth isn't the first place to develop life, then someone should be out there. Little green men aren't walking around downtown, so their absence sort of demands an explanation. Maybe we're early in the life-bearing era of the universe. Maybe every intelligent species eventually nukes itself into nonexistence. Maybe they all develop giga-computers and stop caring about the universe as they plug into the Matrix. Maybe FTL isn't possible. Maybe it's hard to do space travel and also feed everyone on your planet. Or maybe we are unique, and the professor at my college was right: his dubious math showing life couldn't actually arise anywhere did prove it had to be intelligent design, and the students who got bad grades for disagreeing were wrong. But I digress.

If there's other life in the universe, but there are no alien visitors to Earth, it's bad news for humans. That means somehow every species has gotten stranded on their homeworld, and it's probably for reasons that are kind of a bummer. So, to me, the people who advocate that yes actually the aliens are out there are profoundly hopeful. We can get through the Great Filter, because someone else already did. We'll eventually solve hunger, because how would you go out into the universe if you haven't solved scarcity? We'll eventually figure out how to avoid nuclear Armageddon, because check it out, they did. FTL is possible – see, they're here. We won't all just lock ourselves into a VR-island-of-lotus-eaters, because they managed not to.

I don't think the aliens are here.

And besides the fact that we have no credible evidence for them, I just don't think anyone can get through that Great Filter. In 1945 the whole world figured out how easy it would be to fail that test, but really, you don't need nukes for that. The World Wars kind of stopped because of nukes, and without them, we'd probably have had another one in the 50s or 60s. A culling like that every twenty years would keep us firmly planted on this planet. But with nukes, we have to worry about The Last War, where those of us who aren't radioactive vapor get to pay for bullets with bottle caps and try not to get eaten by radroaches.

Maybe nukes aren't the Great Filter. It could be something else. It wouldn't have taken many base pairs being different for the 2020s to have been like the 1350s. As long as the aforementioned conspiracy theorists keep getting elected, I'm not sure that won't be a problem going forward. That'll definitely keep us focused on continuing to breathe, not on going to Alpha Centauri.

I mentioned the whole voluntary-extinction-through-Matrix thing because that's less overtly horrible than the rest of them, and (assuming it were possible) I can totally imagine us doing that. Surveillance Capitalism is a lot easier when your whole mind has cookie tracking on it. Or maybe it's boring, and we just exhaust the resources of the Earth as we create a lot of value for shareholders. Not every Great Filter has to be violent.

My guess? We'll eventually get caught in a Great Filter, just like everyone else, and that's why there are no aliens, and it's probably because of a thing we don't even know about yet. It's pessimistic, I know. I'd like to be proven wrong. But the types of folks that just insist that they exist don't give me a lot of confidence.

Discovery with a Computer Isn’t Discovery

I've talked about this sort of thing before, but here we go again.

Computational models in chemistry are cool and useful. They predict and explain things in a way that's difficult or impossible to do at the bench. But I take issue with the title of this recent paper in JACS: "Computational Discovery of Stable Metal–Organic Frameworks for Methane-to-Methanol Catalysis" (emphasis mine). The authors have done no such thing.

This paper describes a workflow where a database of MOFs is mined for this-and-that feature, and computational methods are used to predict which ones would be good catalysts. Some of them are probably good catalysts, according to their DFT models. Discovery!

Except they didn't actually do anything. There are no turnover numbers or yields or anything like that because they didn't run any reactions. They didn't find a collaborator to run any reactions. They suggest in the manuscript that, well, someone should try these things. But it seems like they consider the matter closed because their models say it should work. The folks at JACS agree, I guess.

That isn't a discovery. It's not even a result. It's a hypothesis. One that needs to be tested before anyone can claim a discovery.

It's especially frustrating to compare it to another paper in the same batch of ASAPs. In that one, the authors look at crystal structures and try to find something that will do the reaction they want. But of course, they designed a bunch and ran them to see which worked best. I'm sure their models told them which one would work best. But then they went and did the thing. Made them and tested them. Optimized them based on results. And in the end, isolated 35 mg at 93% yield, 99:1 dr, 3.5:96.5 er, and 5000 catalyst turnovers. Now that is some science.

Modeled, predicted results aren't results. They're hypotheses.

Attention Grabbing TOCs

Sometimes a header graphic comes up in the ASAPs and I have to shake my head a bit. Like earlier this week, when someone wrote out their N-dealkylation catalyst as (Ir[dF(CF3ppy]2(dtbpy))PF6 instead of just drawing the thing out. It's ChemDraw, not ChemWrite, you know.

But man, one that popped up today was great. High-energy compound chemists are something else. And apparently, their graphics concepts are hard to beat:

Striking.

Crossroads

It's good to be back in Indiana. New job, old friends. Living in the city rocks.

Getting to Indiana was bad.

No direct flights from San Jose, so we flew through Denver. A two-and-a-half-hour layover would be rough, but it would give the cats a chance to stretch their legs in an animal relief room. And Denver isn't so bad as airports go. Decent food options, and it wouldn't be busy on a random Wednesday.

But wow, is Denver windy.

It was when we were circling for longer than usual that I started realizing there might be an issue. We actually made one attempt at a runway but pulled up while still a few thousand feet up. As it turned out, wind shear at ground level had grounded all departures and was preventing any flights from landing.

The captain, with an apologetic tone, told us we were running out of fuel and would divert to a nowheresville airport in Nebraska to refuel and wait for the wind to calm down. It took about two hours. By the time we made it back to Denver, our connecting flight to Indy had just left.

So now we have two nauseated cats, and one nauseated Lucas, from the turbulence. No flights to Indy until the next morning. And a customer service line that wound through the whole airport, since everyone else had missed their connections too. But I managed to get ahold of an agent using the fancy iMessage customer service thingy, and proposed an option to him: fly us to Chicago instead.

The in-laws let us stay at their place in the Chicagoland area for the night. Taylor drove all the way up from Indy and picked us up the next morning. Hailey let us borrow pillows and blankets. Taylor helped me retrieve our luggage from the Indy airport, where it had eventually been delivered a day later (some of it had made it as far as Montana). Then he let us borrow some plates and bowls and stuff while we wait for our household stuff to be delivered. We were tired, but we made it.

We had a very bad time getting here. But that experience reminded me of one of the biggest reasons I wanted to be in Indy: the people. There are good people here. I've missed them very badly.

Feeling Dumb for a While

It's always tough to learn a new set of tools. It's especially tough to learn your second set of tools. The first was the only way to do it for a long time, after all.

Doing the full conversion of NMR Solvent Peaks from UIKit to SwiftUI was one recent example. It took me most of a summer just to get the flow right. Easy things became hard, and it was frustrating. I bounced off of it more than once and just figured I'd go back to UIKit. But I got the hang of it eventually, mostly by just trying to make the thing I wanted to make instead of a million sample projects and tutorials. Every time I hit a snag, I'd search through Stack Overflow or Hacking with Swift and get an answer to that particular issue. There were many such searches on the first day. There were fewer over time.

Eventually, those easy things that had become difficult with the new tools? They were easier than before. And problems I avoided because of the complexity? They were within reach now.

The same sort of thing is happening with me and 3D modeling. I've been using TinkerCAD for a long time to design things to 3D print. But it's pretty inelegant. Just about everything is a combination of basic shapes and their intersections. Now, I could build some pretty cool stuff with TinkerCAD. I'm proud enough of two of those designs to have published them on the internet, and it's really satisfying to see people print them for themselves. But there has always been a huge shadow hanging over all of these designs: what if I put on my big boy pants and used Fusion 360?

Well, I had tried Fusion 360 a few times already. Each time, I bounced off of it. Simple operations in TinkerCAD didn't seem possible in Fusion 360. And years of using TinkerCAD had trained my brain to think of 3D objects as constructions and combinations of simple shapes. So the entire design system of Fusion 360 was foreign to me.

But just like with SwiftUI, once I sat down and gave it a real try with a real project, it turned out that those operations were actually possible. And with a bit of time, easier than in TinkerCAD. I just had to rewire my brain a bit, and be willing to feel dumb for a while. But here's the best part – now, things are possible for me in Fusion 360 that were absolutely impractical in TinkerCAD. Pulling in faces a bit for fit tolerances required completely rebuilding a part in TinkerCAD. In Fusion, it's like four clicks. But then extending one face in a 3D trapezoid pattern to fit in a similar 3D trapezoid hole in another part? More or less impossible in TinkerCAD. In Fusion, again, it's like four clicks.

Learning new tools stinks. It would be so much easier to just get my work done with the old tools. And I have to feel dumb, despite being good enough with the old tools that feeling dumb felt like a distant memory. But most of the time, once I figure out how to solve the old problems with new tools, I realize that there are new classes of problems I never even considered solving because the old tools couldn't handle them. It's not just about solving old problems faster, it's about rewiring my brain to find new ones to solve.

I just wish it didn't stink so much at first to feel dumb again.

A Necessary Addition to the Style Guide

Drawings of chemical structures are one of the most important ways (especially organic) chemists convey information. And while everyone has their style, there are certain conventions that are universal.

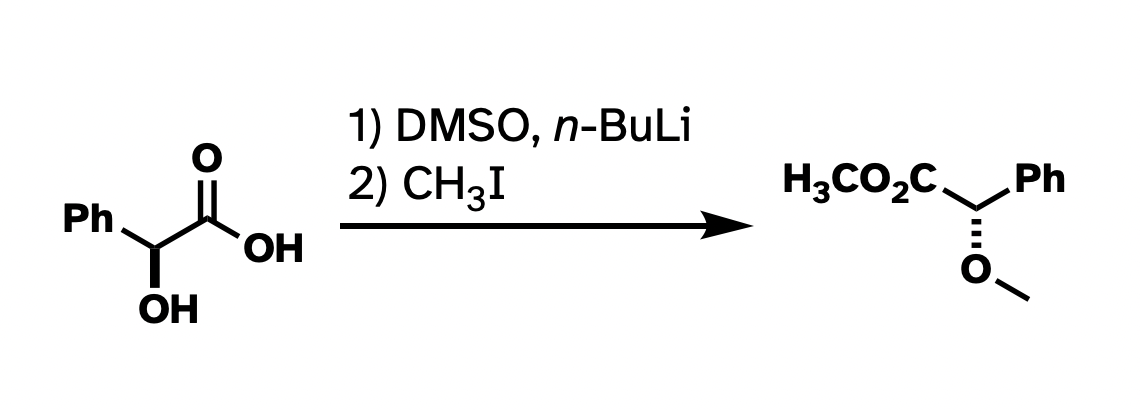

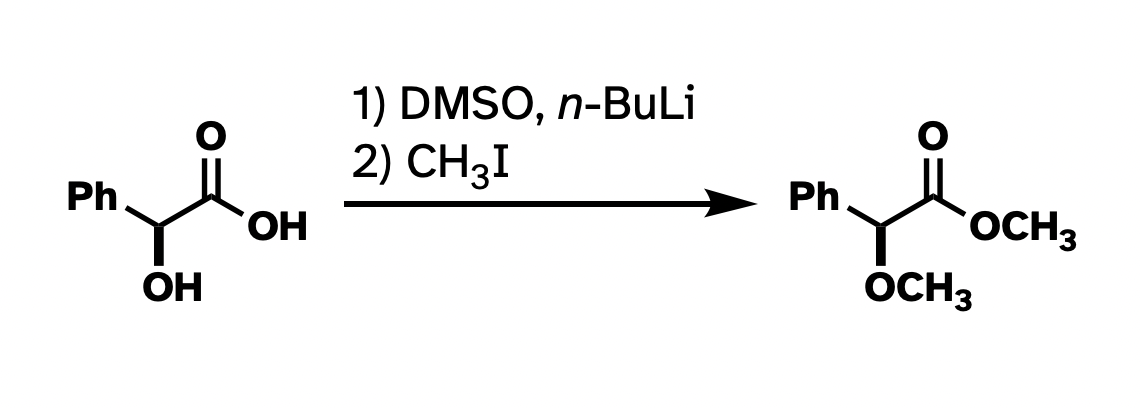

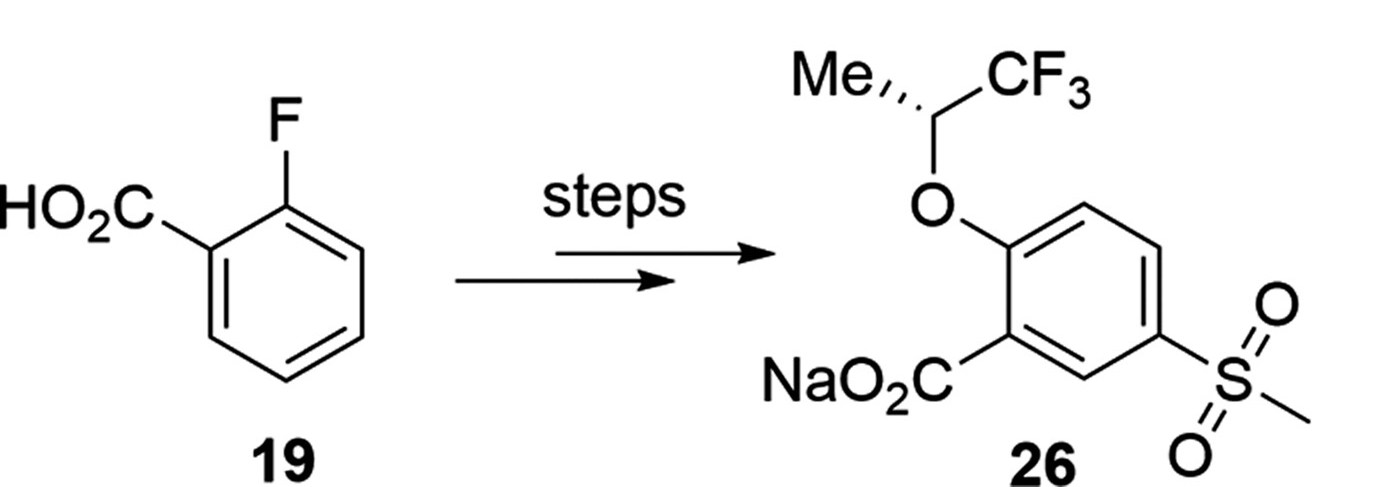

And while I think that nitpicking Chemdraws in presentations is not always constructive, when it comes to publishing, I think there is usefulness in having a style guide. At the very least, drawn structures that are consistent within a paper make taking in the information frictionless. Consistency within a single figure, I think, is even more important. Take this reaction that I ran a bunch of times in graduate school:

This drawing isn't technically wrong. But I've rotated the whole thing around. I've drawn methyl groups two different ways. I also abbreviated the carboxylate in the product but not in the starting material. It would be a lot more clear what's going on if I had "locked in" as much of the structure as possible so the most obvious things to stick out were the changes, and if the abbreviations were consistent.

Better, right?

This argument has little to do with the aesthetics of the above structures. For instance, I really don't like "hanging stick" methyls, like on the ether in the first drawing. But many use it, and it's not really wrong. The actual problem here is clarity and consistency. There wasn't a reagent that looks like "–– I" in the starting materials, so why put it in the product? And there's one "H3C" in the product that works all right, so why not be consistent with the methyls? The point of these drawings is for the easy conveyance of information. I think the top drawing does not do a very good job at that.

This brings me to a paper that dropped in OPRD today. It's a good paper, with great chemistry, and I'm not even going to link to it because this criticism isn't for the authors – it's for everyone that draws structures and puts them on (virtual) paper. But look at this little slice of the header graphic:

If I were to re-draw this, I'd rotate the starting material 60° counterclockwise to show that the starting carboxylic acid hasn't changed (besides being made into a sodium salt). That change makes it immediately clear what's happening here – an SNAr followed by some sort of electrophilic sulfonation reaction. Also, they've abbreviated a methyl two different ways – as "Me" and as a hanging stick. Not so bad, really, but it feels like two different teams worked on the two halves of this molecule.

Again, this isn't an argument about what looks nice. We can argue about what looks nice, and nice looking Chemdraws look different to different people. This is about clarity and ease of information transfer. Keep as much about your starting materials and products unchanged as is practical, and let the changes stand out.

App Store Conspiracies

I'm really not a fan of Apple's App Store policies. This is not a revolutionary statement. Ever since the App Store launched, the 30 percent don't-call-it-a-tax has felt pretty crappy to anyone developing on the platform. But all of that cash can't seem to fund decent App Store review infrastructure, with good apps rejected all the time for no reason and absolutely terrible apps making it through all the time. How can they be raking in billions of dollars, mostly from gambling apps for children, and not be able to pay people to properly filter out the garbage?

But, then (Christopher Atlan, via Michael Tsai):

My sources tell me Google has successfully inserted provocateur agents inside Apples App Review team. They are exceeding their goal to discourage indie devs, making these remarkable apps for the Apple platforms.

So this kind of conspiracy theory stuff is probably wrong. But it's believable, kind of. At least I want it to feel believable. I'd certainly prefer the App Store to be a good place to distribute software. But it's not. As to why, it's easier to imagine that a few bad folks are ruining it than acknowledge that the whole place might be rotten. You can get rid of a few bad people.

But, really. Is App Review being torpedoed by secret Android fans on the inside? I think the simpler explanation is probably right. The incentives for App Review being terrible just outweigh the benefits of fixing it. Thirty percent of gambling apps for children, scams, and all of that adds up to a lot.

New Dungeons and Dragons Rules

So Wizards of the Coast released their first play test material for the next version of Dungeons and Dragons. The first batch of stuff is on character backgrounds and races. I think the new rules are overall good. Not perfect, but pretty good. Based on the vitriol on the internet, I might be in the minority, but the angry folks are always loudest. A bunch of things, on first glance:

Making ability scores a) tied to background and b) completely flexible negates the mechanical requirement for a bunch of old-fashioned race-stat combinations. Not all elves are slender brainiacs, and not all orcs are big brutish tanks. This change felt pretty obvious after Tasha's came out last year, but it's cool to see it codified.

Half-races just being mechanically one or the other is kind of a bummer. But creating a system to pick and choose aspects from two races to combine (like how the half-elf and half-orc already worked, more-or-less) would be really awkward.

Nowhere in any race description does the document even suggest a predetermined alignment. The closest thing to an exception is Tieflings, who "for better or worse" have ancestry in nasty things, but the document explicitly says that ancestry has no effect on moral outlook.

The concept of completely customizable backgrounds (with some pre-generated ones if you don't want to build your own) is very good. I basically did that already and reflavored the official ones to get close to what I wanted for a character.

I'm not sure about the Ardlings. They feel like de-buffed Aasimar, which puts them more in line with a celestial-flavored Tiefling. I guess that's the point. I don't care for the animal head thing.

A flat 50 gp budget for equipment feels fair, but for a brand-new player, a "this or that" choice was way easier. That said, your rogue can now buy studded leather, two daggers, and a bag of ball bearings on day one and be pretty much set for the campaign.

Combining Magic Initiate into three lists (Arcane, Divine, Primal) is very good. Especially for the Primal list – it feels like Rangers got some caster representation. But I imagine an Ancients Paladin or a Scout Rogue with appropriate skills, Thorn Whip, and Hunter's Mark would be a better Ranger than most Rangers.

Getting free Inspiration on a 20/d20 is fine, but the DMs I play with aren't stingy with it. One suggestion I saw was to give Inspiration on a 1/d20 instead to even out the bumps, which feels nice.

I have mixed feelings on the "only player characters crit, and then only on weapon dice" thing. Seeing a full-HP low-level character get massive-damage killed in one hit from a crit feels bad, but also makes for good stories. And you never feel more powerful than when you crit-Smite an undead creature as a Paladin. Bursty damage is hard to design a game around, but it's usually pretty fun in practice.

I do not like the "all nat 1s are fails" thing at all. If you finagle a +12 or whatever in a skill, you should basically never fail at that skill check.
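The arithmetic behind that complaint is simple: with an automatic failure on a natural 1, no modifier can push your success chance above 95%. A small Swift sketch; the DC of 13 in the example is just an illustrative number.

```swift
/// Chance of passing a d20 check, where success means roll + modifier >= dc.
/// If `naturalOneFails` is true, a roll of 1 fails no matter the total.
func successChance(modifier: Int, dc: Int, naturalOneFails: Bool) -> Double {
    let passingRolls = (1...20).filter { roll in
        if naturalOneFails && roll == 1 { return false }
        return roll + modifier >= dc
    }.count
    return Double(passingRolls) / 20.0
}

// A +12 against a (hypothetical) DC 13 skill check.
let currentRules = successChance(modifier: 12, dc: 13, naturalOneFails: false)
let playtestRules = successChance(modifier: 12, dc: 13, naturalOneFails: true)
print("Current skill checks: \(currentRules), playtest: \(playtestRules)")
```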

...

I did not intend to write that much about Dungeons and Dragons. I guess I have a lot of ideas. But I would never want to actually be in charge of this stuff. There are a lot of angry people on the internet this week who are pretty upset about their play-pretend-with-math game. Some people stuck with 3.5e or 4e; I'm sure some will do the same with 5e.

An Ode to Delta IV Heavy

The final Delta IV Heavy launch from Vandenberg just happened, and it kind of makes me sad to see it wind down – especially when comparing it to SLS. There are plenty of things to complain about relating to SLS, but two stand out when comparing to Delta IV Heavy: the engines and the boosters.

Delta IV Heavy's main RS-68 engines are derived from the Space Shuttle's main engines, but are intended for a single use, and are simpler and less expensive as a result. The heavy variant has three nearly identical cores in the first stage, with the outer two serving as boosters. The RS-68 sheds much of the complexity of the RS-25 by cooling the nozzle ablatively, which you can do when you only need to use it once.

Compare that to SLS. If it ever gets off the ground, every launch will throw four flight-proven, reusable RS-25s (the Shuttle's main engines) into the ocean as garbage. An earlier iteration of the Shuttle-derived booster concept did use a few upgraded RS-68s, but they decided the engineering required to manage the interactions of the RS-68s' ablative nozzles with the solid boosters was too difficult to overcome.

And yeah, the boosters. SLS uses Shuttle-derived solid rocket boosters. I think this is really bad for everyone that doesn't work for Northrop Grumman. Humans should never fly on a rocket that uses solids as part of its primary propulsion. Obviously, there's Challenger. That failure was predicted and ignored by managers suffering from go-fever. But in the leadup to SLS's predecessor program, the Air Force determined that in some phases of flight, a solid rocket failing would have a 100 percent chance of killing the crew due to the escaping capsule's flight through a shower of burning fuel. Watching a capsule full of crew successfully escape a failing rocket only to lose its parachutes to burning debris would be heartbreaking.

Delta IV Heavy doesn't use solids for primary propulsion. A failing side- or core booster would be really bad, of course. But it would probably result in an explosion that isn't followed by a persistent fire. By the time an escaping crew came back down through the altitude where the failure happened, the propellant would already be done burning.

Okay, so how about the upper stages? I'd compare them, but SLS uses Delta IV's second stage in an almost unchanged state. Of course there are plans to upgrade SLS's upper stages in the future. But that requires it to fly more than once or twice. We'll see.

Crew-rating the Delta IV Heavy would take some effort. NASA did a study (pdf) early in the Constellation days and said it could be done. It would require some new software, avionics, and process changes in manufacturing. The biggest change would be to modify the second stage. Here's the thing – that modified second stage could be done, because that's what the SLS second stage is. Although I'm not sure how I feel about Boeing's ability to safely implement hardware and software changes without cutting corners.

In the end, I'd feel a lot better about a crew-rated Delta IV Heavy than SLS. Sure, it's expensive, something like $250 million a pop. But SLS is like eight times that. And you have the solid failure debris problem, and the bad feeling of throwing SSMEs into the ocean. And while I think the safest crew-rated rocket on the planet right now is Falcon 9, I'd much rather climb onto a Delta IV Heavy than SLS.

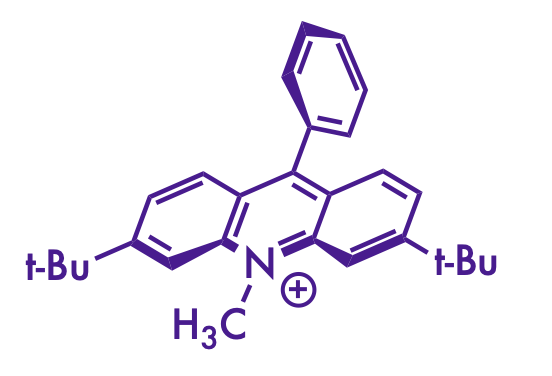

Really Nice Chemdraws

I really enjoy the figures that the Nicewicz lab produces for their publications. Scrolling through this page, it's clear that there's a distinct style there that I don't see in other research groups. And you don't see them in the MacMillan or Johnson group publications, so he didn't pick it up from a former PI.

If you scroll down far enough, you can see that most of it is there from the beginning. But the font took a while to show up (the 2015 JACS paper at 18 doesn't have it, but the Science paper at 19 does). There's a transition period for the font, then it's really consistent onward.

There are probably some subtle factors that I'm not seeing, but to my eye, there are a few things that make the Nicewicz lab figures stand out:

- the font face and relative thinness compared to the bonds

- liberal use of stereochemical wedges and dashes (especially on the acridinium catalysts)

- a consistent use of specific red and blue colors for emphasis

I think I have some ChemDraw settings that more-or-less replicate the Nicewicz style as much as I can manage. The version of the table of contents graphic for this paper on the Nicewicz website includes some color swatches that the one on the Synlett site doesn't, and that helped a lot to nail down the colors.

- Futura Medium, size 10

- 0.016 inch line width

- 0.032 inch bold width

- 0.2 inch fixed bond length

- Red color: 186, 6, 6 rgb (#BA0606 hex)

- Dark blue: 55, 6, 123 rgb (#37067B hex)

- Light blue: 118, 146, 183 rgb (#7692B7 hex)
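The hex codes are just the RGB triplets written in base 16, which is easy to double-check if you're typing these into something that only accepts one form. A tiny Swift sketch; the `RGBColor` type is made up for this example.

```swift
/// An 8-bit-per-channel RGB color (hypothetical helper type).
struct RGBColor {
    let red: UInt8
    let green: UInt8
    let blue: UInt8

    /// "#RRGGBB" form: each channel as two uppercase hex digits.
    var hex: String {
        let channels: [String] = [red, green, blue].map { (channel: UInt8) -> String in
            let digits = String(channel, radix: 16, uppercase: true)
            return digits.count == 1 ? "0" + digits : digits
        }
        return "#" + channels.joined()
    }
}

// The three colors from the settings list above.
let palette: [(name: String, color: RGBColor)] = [
    ("Red", RGBColor(red: 186, green: 6, blue: 6)),
    ("Dark blue", RGBColor(red: 55, green: 6, blue: 123)),
    ("Light blue", RGBColor(red: 118, green: 146, blue: 183)),
]

for entry in palette {
    print("\(entry.name): \(entry.color.hex)")
}
```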

(my attempt at a Nicewicz-style drawing)

Although I still can't figure out how to get the acridinium catalyst to look right. Bolding the correct bonds and wrangling it into the right orientation with the Structure Perspective tool in ChemDraw gets it close, but the double bonds get a little squished on some of them. And I still think the font isn't quite right. Maybe there's a Futura Light that they use that I don't have installed.

In any case, I wish I could make figures that look as good as Nicewicz's without obviously copying the style. Mine aren't too bad, I guess. At least I'm not [putting] [every] [word] [in] [brackets].

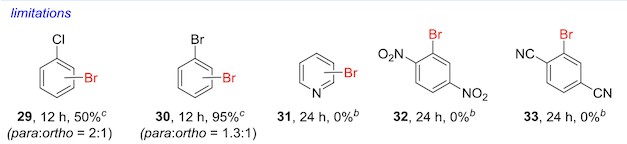

Limitations

Really cool paper out in JACS yesterday detailing an electrophilic aromatic substitution reaction that works on really electron-deficient rings. With some cheap pool chemicals, a pretty simple benzenesulfonic acid catalyst, and the world's most magical solvent, you can stick a bromine on some pretty tough rings.

But as interesting as that is, my favorite parts of the paper are the portions of the figures that list the method's limitations.

There's an obvious incentive in a methods paper like this to market the method in as positive a light as possible. A huge percentage of papers probably leave out results that didn't turn out well because it makes the method look weaker. But when you do see this sort of thing, it's on really high-quality papers – and it's pretty likely that you can trust the method to work on the first shot.

The Beginning of NSP 3

I've begun the great migration of NMR Solvent Peaks to SwiftUI.

I have looked into porting the iOS / iPadOS version a few times, and always hit hard walls. Not being able to place certain views where I wanted was the primary problem, but there were others. But three things have changed:

- A redesign of the main interface renders many of the issues I had before moot

- I've worked around some of the SwiftUI problems to put things where I want them

- The rest of the problems aren't bad enough to warrant a workaround (read: a pile of hacks)

To my surprise, I could have done this all last year, since none of what I've written (so far) requires the iOS 16 improvements to SwiftUI. The main thing motivating me is the message from the WWDC '22 Platforms State of the Union: AppKit and UIKit will be around for a while, but they're old news.

I'm not sure it's the same sort of transition as Carbon to Cocoa back in the early Mac OS X days. Carbon absolutely went away for good in Snow Leopard, but SwiftUI "compiles down" to AppKit and UIKit components in the background. The only way those frameworks are going away is if SwiftUI stops relying on them and runs natively on its own, and I don't think that's happening any time soon.

I'm only partway through the porting process. But the underlying model code doesn't need to change at all (it's still Swift, after all). Porting over the multiplet drawing code wasn't as hard as I thought it would be. And the overall design for the main interface is starting to come together. In the next few weeks, I'll see if I can get it across the finish line. Hopefully it'll be ready when iOS 16 drops in September or October.
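Part of why model code can come over untouched is that it has no idea what's drawing it. As a hypothetical illustration (not the app's actual code), this is the kind of framework-free Swift a multiplet drawing could sit on: first-order line intensities from coupling to n equivalent nuclei of spin I, which gives 2nI + 1 lines. For spin-1/2 nuclei like ¹H that's Pascal's triangle; for deuterium (spin 1) it's the 1:2:3:2:1 quintet of a CHD2 residual solvent peak. The example shift, coupling constant, and field strength are illustrative values.

```swift
/// Relative intensities of a first-order multiplet from coupling to
/// `nuclei` (>= 0) equivalent nuclei. `twiceSpin` is 2I (1 for 1H, 2 for 2H),
/// kept as an integer to avoid fractional spins.
/// Each nucleus splits every line into 2I + 1 equal lines, so the pattern is
/// a repeated convolution with [1, 1, ..., 1].
func multipletIntensities(nuclei: Int, twiceSpin: Int) -> [Int] {
    let split = [Int](repeating: 1, count: twiceSpin + 1)
    var pattern = [1]
    for _ in 0..<nuclei {
        var next = [Int](repeating: 0, count: pattern.count + split.count - 1)
        for (i, a) in pattern.enumerated() {
            for (j, b) in split.enumerated() {
                next[i + j] += a * b
            }
        }
        pattern = next
    }
    return pattern
}

/// Line positions in ppm, centered on `shift` and spaced by `couplingHz`.
/// Dividing Hz by the spectrometer frequency in MHz gives ppm.
func multipletPositions(shift: Double, lineCount: Int,
                        couplingHz: Double, spectrometerMHz: Double) -> [Double] {
    let spacing = couplingHz / spectrometerMHz
    let center = Double(lineCount - 1) / 2.0
    return (0..<lineCount).map { shift + (Double($0) - center) * spacing }
}

// Illustrative: a CHD2 quintet at 2.50 ppm, J(H-D) of 1.9 Hz, 400 MHz.
let intensities = multipletIntensities(nuclei: 2, twiceSpin: 2)
let positions = multipletPositions(shift: 2.50, lineCount: intensities.count,
                                   couplingHz: 1.9, spectrometerMHz: 400)
for (ppm, height) in zip(positions, intensities) {
    print(ppm, height)
}
```

Nothing here imports UIKit or SwiftUI, so whichever framework draws the lines only has to consume two arrays.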

Re-Listening to Podcasts from the Dark Times

A good chunk of my job involves standing at a fume hood, mostly by myself, and doing stuff with my hands. Early on in grad school I started listening to podcasts, and they're great for when you're doing extended solo work.

I kind of only listen to two types of podcasts. There's the "two or three friends just talk about stuff" type and the "one person tells you about historical stuff" type. Sometimes the first type has a vague outline but it's better as it becomes more freeform, because the show is really more about the people than the topics. The second type is effectively a history audiobook that is released a chapter at a time. I know I'm weird, because the more popular interview shows and the murder documentaries and public radio essays don't really do it for me.

There aren't really even that many examples of either type that I actually enjoy, so I end up listening back through favorites. Usually this entails downloading episode 1 and just going through it in chronological order. I'm currently going through a Roderick on the Line re-listen, which is a perfect example of the "just some friends talking about stuff" type.

Podcasts are especially nice when the world is terrible and you just want to hear cool people talking about cool stuff. The problem with the more free-form types, though, is when you get to certain points in time. Say, November of 2016, when a little over 50% of Americans just sort of had to sit and blink, or panic, or plan a move to Canada. If I'm doing a podcast re-listen, I sort of have to skip a few months.

The other, obviously, is mid-March 2020. We all had to start taking the wrong kind of vacation. It's pretty wild to think how most of us reacted to a few cases here or there. Compare that to the current "who cares lol" wave of mid-2022, where there are something like an order and a half of magnitude more cases than in early 2020, despite a decent vaccination campaign (at least in California), and yet many people seem to think that everything's fine. But I digress.

It's been more than two years, so the panic at the start of the current unpleasantness is kind of history now. But it's hard to listen to people say "well, we'll get through this eventually" because we really haven't yet.

So, maybe two periods of podcast re-listening time to skip now.

Models and Malarky

Every chemical engineer loves a good computational model. You have a reaction stream, you add some stuff, and would you look at that, there will be a minor exotherm. You'd better cool it down a bit before adding the stuff and be sure to add it slowly. That'll add some capex and time costs, but that's what the budget is for.

Models are great. But they usually need verification in the real world.

I've been called a radical empiricist for this view (not that kind, or that kind). But I don't think it's unreasonable. Calculated models are not the answer. They're the question.

This came up at work lately with a predicted exotherm on mixing two solutions. The modeling software spat out a nearly 20 °C exotherm, which was ridiculous given the components. The modelers asked for help, I measured it in the lab, and it was actually a 2 °C endotherm in a flask. The hunt for software bugs begins.
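One quick sanity check on a predicted exotherm like that is to run the adiabatic temperature rise backwards and ask how much heat the model is implicitly claiming. A minimal Swift sketch, assuming no heat loss to the flask or surroundings and a single lumped heat capacity for the mixture; the 1 kg mass and water-like 4184 J/(kg·K) heat capacity are illustrative, not the actual mixture from work.

```swift
/// Adiabatic temperature change in K, assuming every joule released on
/// mixing goes into the mixture itself (no losses): deltaT = Q / (m * cp).
/// - heatReleased: J released on mixing (negative for an endotherm)
/// - mass: total mass of the mixture, kg
/// - heatCapacity: specific heat capacity of the mixture, J/(kg·K)
func adiabaticTemperatureChange(heatReleased: Double, mass: Double,
                                heatCapacity: Double) -> Double {
    heatReleased / (mass * heatCapacity)
}

/// The same relation run backwards: the heat (J) a model implicitly claims
/// when it predicts a temperature change of `deltaT` K.
func impliedHeatOfMixing(deltaT: Double, mass: Double,
                         heatCapacity: Double) -> Double {
    deltaT * mass * heatCapacity
}

// Illustrative only: 1 kg of a mixture with a water-like heat capacity.
let claimed = impliedHeatOfMixing(deltaT: 20, mass: 1.0, heatCapacity: 4184)
print("A 20 K rise implies about \(Int((claimed / 1000).rounded())) kJ released per kg")
```

If known heats of mixing or solution for the components are nowhere near the number that falls out, the model deserves a second look before anyone sizes equipment around it.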

But a lot of times, when it's more subtle, that kind of result is overlooked or ignored. At best, you end up over-provisioning your equipment and wasting your budget. Or maybe your timeline gets pushed back because you have to source a beefier part. But at worst, well, kaboom.

It's the same problem as with organic reaction mechanisms. Nature is complex. We can't yet accurately simulate every bit of the universe down to the boson and quark or whatever. So we compromise and make models. Usually they're pretty good. Usually.

So verify your models. Maybe it's my bias as a process chemist, but at the end of the day, it's my job to make sure product gets into the drum. When the equipment is designed and built wrong because the model said it was fine, it makes that job a lot harder.

Why Sparteine?

I always scratch my head when papers like this one show up in the ASAPs. Sparteine is a weird chiral ligand. The (+) version is comparatively inexpensive ($18/gram right now at Oakwood if you buy 10 grams), but the (-) version is infamously expensive or impossible to source ($595/gram right now at Oakwood). The paper in question only mentions the (+) version, so if you want the other enantiomer, you'll need to shell out some cash or find another ligand system. I can't help but think there had to be a more flexible chiral amine scaffold to go after.

Painful Retractions

Two pretty rough retractions today in JACS (1, 2). Same group, same author list, same story: raw NMRs and HPLC traces were edited or fabricated.

It's difficult to figure out how to feel about these things. At first, you feel kind of bad for the professor here. Their grad student faked data and now their name has an asterisk beside it.

But it's also not very hard to imagine a lab culture where the only acceptable results are good results, and not getting the right product or a good ee or a high yield is unacceptable. Professors have been known to demand results in failing projects before letting students graduate.

Not every case of data fabrication is the result of a desk-pounding PI. This one probably isn't, given the level of investigation that seems to have happened before retraction.

But I bet a lot of them are.