NMR Tube Carriers

I really enjoy designing 3D models, and I really don't like filling out near-miss paperwork.

I've been designing and re-designing NMR tube carriers for literal years now, and I think I finally have a set that I like. Check them out on Printables.

At my last job, there was a strict rule about carrying NMR tubes around in mixed-use hallways. If you dropped one and it shattered, or it broke in your pocket, you'd have a potentially-flammable, potentially-toxic spill in an area where people don't wear gloves or goggles. It's a small risk, in the end, but no safety person ever got fired for suggesting an annoying fix to a minor safety concern. And they will scold you while you fill out a pile of paperwork.

I didn't like the carriers that work supplied – mostly that they only held one tube. So I designed and printed a carrier that held multiple tubes. That brought me into my favorite part of 3D printing: the loop of designing, printing, evaluating, and redesigning. It took a while, but I think they're ready.

At my current job, we have a group that routinely brings 15+ tubes down to the NMR lab at a time. So, I designed a huge one that carries 19 of them in a nice, space-efficient hex pattern. I could go up by one more 'ring' of tubes, but I think it would take half a kilo of filament to print. The single-tube carrier is probably what everyone needs. A 3-tube 'small' version is an upgrade. I use the 7-tube 'medium' version, but I'll never fill it up in one trip. And the enormous 19-tube carrier is enough even for the edge-case users.
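
For the curious, the "one more ring" math is easy to sketch. This assumes the carrier is a plain hex packing with one center tube and full rings around it, where ring k holds 6k tubes; the function name is made up for illustration. The 3-tube version is a triangle, so it isn't part of this sequence.

```python
def tubes_in_hex_carrier(rings: int) -> int:
    """Tube count for a hex-packed carrier: one center tube plus `rings` full rings.

    Ring k holds 6*k tubes, so the total is 1 + 6*(1 + 2 + ... + rings),
    which simplifies to 1 + 3*rings*(rings + 1).
    """
    if rings < 0:
        raise ValueError("rings must be zero or more")
    return 1 + 3 * rings * (rings + 1)


if __name__ == "__main__":
    for rings in range(4):
        print(f"{rings} ring(s): {tubes_in_hex_carrier(rings)} tubes")
```

So the single, the 7-tube 'medium', and the 19-tube carrier are zero, one, and two rings, and the next ring up would hold 37 tubes.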

It's really fun to build cool stuff and put it out into the world.

Five and a Half Years

Things have been going, on the whole, pretty well.

After getting laid off from my job in Indianapolis, I bid the Midwest a fond farewell to take a position out in the Los Angeles area. It was an adjustment, but things are okay out here. The wife and I might be the only people in the area watching Indy Eleven games from our couch, and I'm never going to accept the awful no-cold-snap corn they have here, and I'm going to miss being able to grab cheap seats ten minutes before kickoff and walk to a Colts game on a whim. And all of that, of course, is nothing compared to being so far away from friends again. I miss those people. (And, in one case, their baby!)

On the work front, though, things are going fantastically. I like to joke that I'm the head of process chemistry. Realistically, I'm just the first scale-up chemist the startup hired, but it's great to be The Expert in something like that in a team of medicinal chemists. And while I was icked out by the "AI"-ness of it all on their homepage at first, during interviews it became apparent that this is not a company where they just ask an LLM how to do chemistry – instead, they generate a bazillion data points with cool robots and combinatorial chemistry, then train ML models, and use that info to design another round of experiments. I don't really interact with that workflow directly, but it's fun to see from the sidelines. It's been about five months since I started, and I've never felt so valued as an individual contributor, or been given this much responsibility to manage big projects by people who also recognize that I'm a real person. And my coworkers are awesome. It's a mostly younger-leaning team, and even the dude-iest AI dudebros love to dunk on the hucksters who claim their plagiarism machines can think.

About a week and a half ago, it appears that I brought something home from work that I had successfully avoided for more than half a decade.

Covid is not fun. My brain was scrambled eggs there for a couple of days. I'm not sure when I'm going to clear this cough, and I'll be working from home until I have consistent good days. Worst of all, I only had one test at first, and it came back negative early on – and so instead of being completely paranoid about locking myself in a room and avoiding giving it to the asthmatic wife, I passed the baton. So as I recover, I get to take care of her like she took care of me. It will have been a very unpleasant couple of weeks. I'm just relieved that my job's policy is "stay away until you are no longer sick, because duh, and we do not keep track of sick days." Beats the heck out of the policy my job had in 2020: "we make pharmaceuticals, so you are an expendable essential worker, and also we won't enforce any mask mandate, and also you get to work outside in the smoke and 110° heat doing 60+ hour weeks." Not that I'm still upset at that situation.

But I had really held a lot of pride that I never got it. I know it was somewhat luck of the draw, but I put my thumb on the scale where I could. I was the last one at my job in like 2022 still masking up all day, but once we were a few years into the vaccination campaigns I figured I was being overly cautious. I still (and can't imagine a future where I won't) put on an N95 for public transit, especially flights. I had my appointment set up for my 2025-6 shot, although that's probably going to have to be delayed. It was just really surreal seeing that second line on the test show up for the first time ever.

It seems like as the years go on and evolution does its thing, Covid continues to get less lethal and more contagious. In the long run, I was bound to get it eventually. Doesn't make it suck any less, though. And at the rate the current brain-worm-addled government is doing damage, I think the already-slim chances that we'll ever be done with Covid have been reduced to zero.

Something Funky is Happening in Stockholm

The 2024 Nobel Prizes were announced a few weeks back. I think the folks who decide who becomes a laureate need to have their heads checked.

I have gripes about the chemistry prize. But I usually do. By my count, of the 20 prizes awarded since I took my first chemistry course as a sophomore in high school, 11 have been for something other than chemistry. That thing is biology.

Okay, so maybe click chemistry is chemistry. Even though the real innovation was being able to attach stuff to DNA or whatever in cells. But conservatively, 50+% of the Nobel Prizes in Chemistry in the last 20 years have gone to people doing biology, analytical techniques for biology, or chemical tools specifically for use in biology. And hey, guess what. There’s a prize for that: Physiology or Medicine. Which, since 2005, has gone to biologists doing work in things like RNA and protein interactions and stuff several times.

But the physics prize this year takes the cake. They gave it to the AI guys.

Not particles, or waves, or stars and planets, or materials. Nope. They gave it to the guys who invented algorithms that led to ways of turning massive copyright theft into energy-guzzling slop factories. You can generate a lewd image of your favorite anime character with improbable anatomy. You can generate paragraphs of text to email to everyone in your department that nobody will read. You can work on a way to do "full self driving" with cheap hardware, and someone randomly dies only once in a while. All it takes is putting artists out of work, putting carbon into the atmosphere like you’re trying to win a diesel truck idling contest, and sending GPU prices into the stratosphere.

Three years ago they gave it to the people modeling global warming. Now, they’re endorsing the guys saying climate change targets are unreachable because you have to burn a lot of energy to run a chat bot that lies.

When this sort of thing became available to the public, I played around with it for a bit. But it didn’t really make anything useful unless I let my Mac crank for an hour at full blast, and even then I had to pick one okay image out of a pile of slop. And then people I know started getting let go of their writing jobs. Some were offered new jobs, at lower wages, to edit the bot’s output. That put an end to any flirtation I had with the garbage factory.

This weekend, I got to review a paper for OPRD. The abstract, introduction section, and conclusions were (as far as I can tell) written entirely with LLM tools. Complete with misattributed citations and, in one instance, a citation to an article that did not even exist. I'm guessing it took me a lot longer to review that piece of crap than it took the LLM to generate it.

Pretty soon, it’s gonna be on my phone. iOS 18.1 dropped this week. Generating bad caricatures of my friends. Writing emails to people that won’t read them and instead autoreply with their own bot. Making new emojis, I guess. For what reason? Supposedly “everyone” knows it’s the new hotness. Nobody wants to be perceived as being behind. Even if they don’t understand it, they have to pretend to. Or at least be enthusiastic, so dumb guys with money will invest.

I think the folks in Stockholm got caught up in the “don’t be left behind” hype cycle.

The prizes are a way to say “this is important.” For chemistry, usually that means it was invented a while ago and now plays an important role. Physics tends to be more recent. Physiology or Medicine varies; sometimes it’s older, but often it’s more like Time appointing a Medicine of the Year. Literature is something I’ll pass on commenting on too much, but I know they gave it to Bob Dylan a few years back and I’ll always think that was hilarious.

So maybe the physics prize committee members thought well, this is our chance to confirm that this prize is meaningful. We can give it to the AI guys. Everyone knows it’s important. Maybe it’s not physics. Who cares?

The thing is, when you start applying enough energy to a biological system, it stops being biology and starts being physics. All the energy being poured into AI data centers is gonna result in carbon emissions that speed that process up for us. Maybe we’ll get blown away by hurricanes and tornadoes, flooded, or burned earlier than we otherwise would have. At least we had anime boobies to look at. And the unemployed time to look at them.

The Right Kind of Retraction

A real bummer of a retraction in JACS last week, but it's an example of the scientific process doing what it's supposed to do.

The researchers had found a cool optical effect where a particular absorption band in a dye molecule got redshifted when you squeeze it between two plates spaced very, very close together (like microns). This effect also seems to affect things like reaction rates and selectivity and other sorts of things that, if you wanted to, could result in some very fancy reactions. But we, as a community, don't really have a super good grasp of why what's happening is happening. Noticing a redshift in that dye's absorption band led the researchers to suggest that the oddball chemistry happening in those tiny spaces is due to through-space electronic interactions. (If I'm reading their paper correctly, that is. I'm no spectroscopist.)

But, then, about a year and a half later, the team had been moving forward with the next set of experiments, and found that the effect they had seen was due to an optical artifact. That artifact was also present in their control experiments, making it harder to catch. Because they couldn't differentiate between the effects of those reflections and the effect they had proposed, they retracted the article.

It's really never fun to have to issue a correction or a retraction. But it appears that this one was due to the scientific process doing what it's designed to do: discover, replicate, and extend. If you're a new grad student in a lab, it's pretty typical to join a project and start by replicating the results described in a previous paper from the group. As a grad student, it gets you familiar with the equipment and process while having a good metric of whether or not you're ready to start doing new stuff. But as a PI, it gets more eyeballs on results. In the worst case, a new student can't replicate a result because a former student fabricated it. But in this case, it appears as though fresh eyes found an artifact that had gone unnoticed until then, and the authors of the paper did the right thing by retracting it.

I don't read a lot of the hardcore spectroscopy literature. But if I did, after this retraction, I'd be willing to trust the expertise of the lead author and his lab more, not less.

The Lesson of Water-Glycol Chillers

Roughly every eighteen months, I have to re-learn this lesson. I'm gonna write it down this time. Maybe that'll help me remember.

Reactor chillers are really cool. You take a jacketed flask or reactor, hook it up, put some heat transfer fluid in the chiller, and turn it on. You can control the temperature of the jacket, and then the contents of the reactor, using the chiller. The fancy ones can do temperature programs and ramps and stuff if you're doing crystallization work. No oil baths, no dry ice, no heating mantles. They're awesome.

That heat transfer fluid, though. My favorite is Syltherm, which is a polysiloxane material. You can get it really hot and really cold, at least in the range that organic chemistry is typically performed. But it burns, which isn't great. For very cold systems, like cryogenic condensers for vacuum distillations, one of my coworkers at my first process job introduced me to something really simple: ethanol. Very low viscosity compared to the other options when you're in cryogenic conditions. Watch out for the vapors, though. Again, the burning.

But the most commonly used heat transfer fluid is a water / glycol mixture. I've seen both 50:50 water : ethylene glycol and 50:50 water : propylene glycol as pretty common options. You can get them reasonably warm and reasonably cold. It's about as cheap as you can get for something like this. No big toxicity issues. And with all that water, flammability shouldn't be a problem.

But.

You have to use your brain. When it's heated up, or sits there in the chiller for a while, that water has an appreciable vapor pressure. But the glycol doesn't. So one day, you open up the tank and notice it's low. You grab your pre-made bottle of 50:50 and dump some in. Congratulations, your 50:50 mix is now like 40:60 water : glycol. Next time, it's 30:70. And soon, because it doesn't take much, you have a very viscous mixture that stops flowing very well. Your pump says it's at -5 °C, but the fluid isn't making it to your condenser fast enough.

And if you're me, today, you suddenly remember all of this when the DCM you're trying to distill starts to fly right past your condenser.

Sigh. This time I'll remember. Certainly. Just add water to the chiller.
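
Here's a back-of-the-envelope sketch of the drift, mostly so future me has numbers to look at. It assumes a hypothetical 10 L tank that loses 1 L of water (and no glycol) between refills, and it treats volumes as additive, which water and glycol don't strictly obey. The function names and figures are made up for illustration.

```python
def refill_cycle(water_l, glycol_l, water_lost_l, refill_water_fraction):
    """Evaporate some water, then refill the tank to its original volume.

    Assumes only water evaporates and that volumes are simply additive
    (both are simplifications for illustration).
    """
    water_l -= water_lost_l
    water_l += water_lost_l * refill_water_fraction
    glycol_l += water_lost_l * (1.0 - refill_water_fraction)
    return water_l, glycol_l


def water_fraction_after(cycles, refill_water_fraction, tank_l=10.0, water_lost_l=1.0):
    """Water volume fraction after some number of evaporate-and-refill cycles."""
    water_l = glycol_l = tank_l / 2  # start from a fresh 50:50 fill
    for _ in range(cycles):
        water_l, glycol_l = refill_cycle(
            water_l, glycol_l, water_lost_l, refill_water_fraction
        )
    return water_l / (water_l + glycol_l)


if __name__ == "__main__":
    for cycles in range(5):
        premix = water_fraction_after(cycles, refill_water_fraction=0.5)
        water_only = water_fraction_after(cycles, refill_water_fraction=1.0)
        print(
            f"{cycles} refills: premix top-up gives {premix:.0%} water, "
            f"water-only top-up gives {water_only:.0%} water"
        )
```

With those made-up numbers, topping up with premix walks the tank from 50:50 toward glycol-heavy a few percent at a time, while topping up with plain water keeps it at 50:50.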

Discovery with a Computer Isn’t Discovery

I've talked about this sort of thing before, but here we go again.

Computational models in chemistry are cool and useful. They predict and explain things in a way that's difficult or impossible to do at the bench. But I take issue with the title of this recent paper in JACS: "Computational Discovery of Stable Metal–Organic Frameworks for Methane-to-Methanol Catalysis" (emphasis mine). The authors have done no such thing.

This paper describes a workflow where a database of MOFs is mined for this-and-that feature, and computational methods are used to predict which ones would be good catalysts. Some of them are probably good catalysts, according to their DFT models. Discovery!

Except they didn't actually do anything. There are no turnover numbers or yields or anything like that because they didn't run any reactions. They didn't find a collaborator to run any reactions. They suggest in the manuscript that, well, someone should try these things. But it seems like they consider the matter closed because their models say it should work. The folks at JACS agree, I guess.

That isn't a discovery. It's not even a result. It's a hypothesis. One that needs to be tested before anyone can claim a discovery.

It's especially frustrating to compare it to another paper in the same batch of ASAPs. In that one, the authors look at crystal structures and try to find something that will do the reaction they want. But of course, they designed a bunch and ran them to see which worked best. I'm sure their models told them which one would work best. But then they went and did the thing. Made them and tested them. Optimized them based on results. And in the end, isolated 35 mg at 93% yield, 99:1 dr, 3.5:96.5 er, and 5000 catalyst turnovers. Now that is some science.

Modeled, predicted results aren't results. They're hypotheses.

Attention Grabbing TOCs

Sometimes a header graphic comes up in the ASAPs and I have to shake my head a bit. Like earlier this week, when someone wrote out their N-dealkylation catalyst as (Ir[dF(CF3)ppy]2(dtbpy))PF6 instead of just drawing the thing out. It's ChemDraw, not ChemWrite, you know.

But man, one that popped up today was great. High-energy compound chemists are something else. And apparently, their graphics concepts are hard to beat:

Striking.

A Necessary Addition to the Style Guide

Drawings of chemical structures are one of the most important ways (especially organic) chemists convey information. And while everyone has their style, there are certain conventions that are universal.

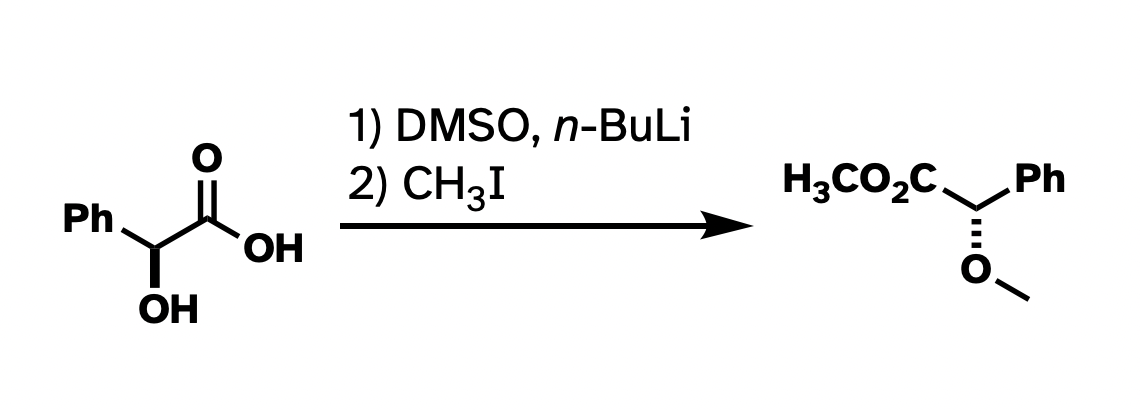

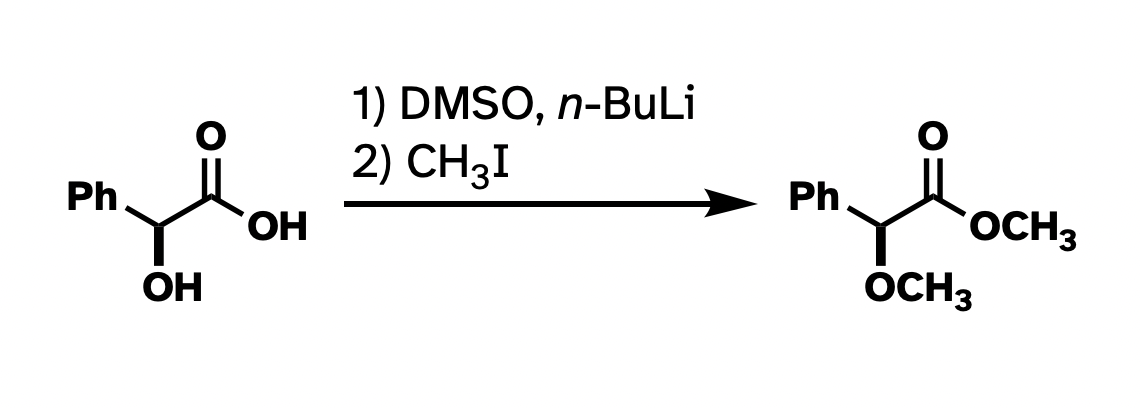

And while I think that nitpicking Chemdraws in presentations is not always constructive, when it comes to publishing, I think there is usefulness in having a style guide. At the very least, drawn structures that are consistent within a paper make taking in the information frictionless. Consistency within a single figure, I think, is even more important. Take this reaction that I ran a bunch of times in graduate school:

This drawing isn't technically wrong. But I've rotated the whole thing around. I've drawn methyl groups two different ways. I also abbreviated the carboxylate in the product but not in the starting material. It would be a lot more clear what's going on if I had "locked in" as much of the structure as possible so the most obvious things to stick out were the changes, and if the abbreviations were consistent.

Better, right?

This argument has little to do with the aesthetics of the above structures. For instance, I really don't like "hanging stick" methyls, like on the ether in the first drawing. But many use it, and it's not really wrong. The actual problem here is clarity and consistency. There wasn't a reagent that looks like "–– I" in the starting materials, so why put it in the product? And there's one "H3C" in the product that works all right, so why not be consistent with the methyls? The point of these drawings is for the easy conveyance of information. I think the top drawing does not do a very good job at that.

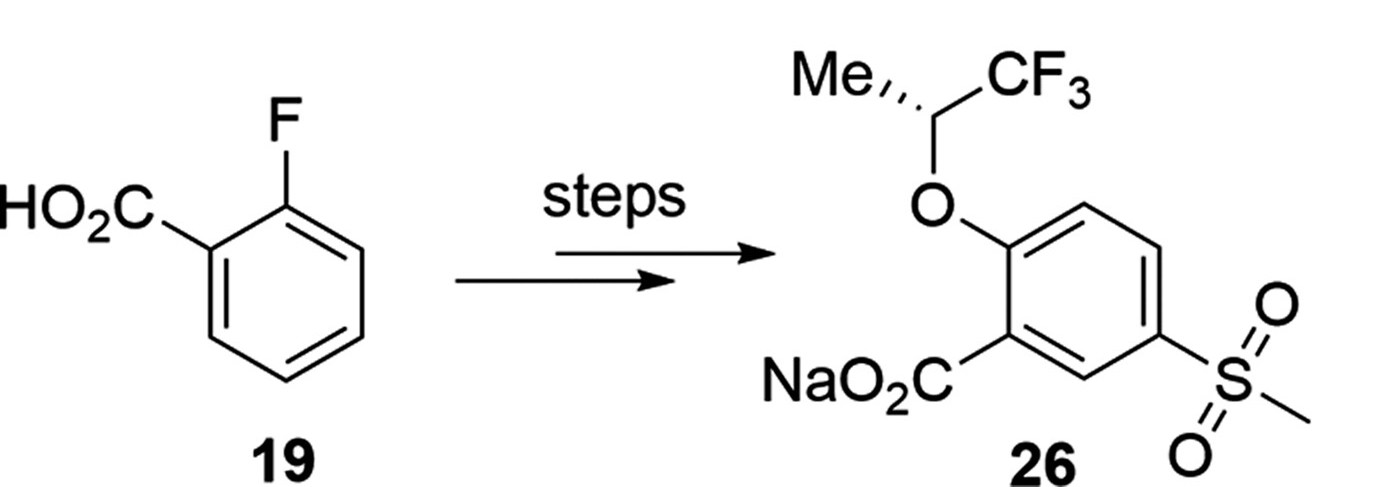

This brings me to a paper that dropped in OPRD today. It's a good paper, with great chemistry, and I'm not even going to link to it because this criticism isn't for the authors – it's for everyone that draws structures and puts them on (virtual) paper. But look at this little slice of the header graphic:

If I were to re-draw this, I'd rotate the starting material 60° counterclockwise to show that the starting carboxylic acid hasn't changed (besides being made into a sodium salt). That change makes it immediately clear what's happening here – an SNAr followed by some sort of electrophilic sulfonation reaction. Also, they've abbreviated a methyl two different ways – as "Me" and as a hanging stick. Not so bad, really, but it feels like two different teams worked on both halves of this molecule.

Again, this isn't an argument about what looks nice. We can argue about what looks nice, and nice looking Chemdraws look different to different people. This is about clarity and ease of information transfer. Keep as much about your starting materials and products unchanged as is practical, and let the changes stand out.

Really Nice Chemdraws

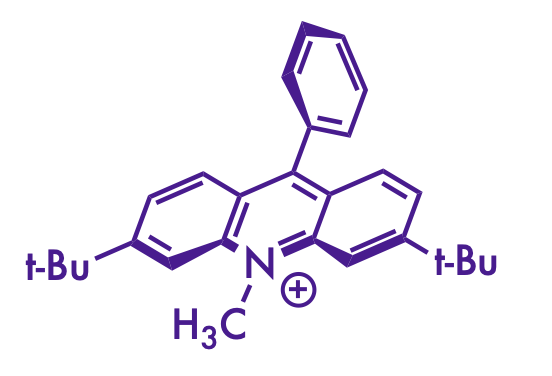

I really enjoy the figures that the Nicewicz lab produces for their publications. Scrolling through this page, it's clear that there's a distinct style there that I don't see in other research groups. And you don't see them in the MacMillan or Johnson group publications, so he didn't pick it up from a former PI.

If you scroll down far enough, you can see that most of it is there from the beginning. But the font took a while to show up (the 2015 JACS paper at 18 doesn't have it, but the Science paper at 19 does). There's a transition period for the font, then it's really consistent onward.

There are probably some subtle factors that I'm not seeing, but to my eye, there are a few things that make the Nicewicz lab figures stand out:

- the font face and relative thinness compared to the bonds

- liberal use of stereochemical wedges and dashes (especially on the acridinium catalysts)

- a consistent use of specific red and blue colors for emphasis

I think I have some ChemDraw settings that more-or-less replicate the Nicewicz style as much as I can manage. The version of the table of contents graphic for this paper on the Nicewicz website includes some color swatches that the one on the Synlett site doesn't, and that helped a lot to nail down the colors.

- Futura Medium, size 10

- 0.016 inch line width

- 0.032 inch bold width

- 0.2 inch fixed bond length

- Red color: 186, 6, 6 rgb (#BA0606 hex)

- Dark blue: 55, 6, 123 rgb (#37067B hex)

- Light blue: 118, 146, 183 rgb (#7692B7 hex)
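
If you'd rather keep these in one place than retype them, here's a small sketch that stores the settings above and double-checks that the RGB values match the hex codes. The dictionary layout and key names are my own invention, not a ChemDraw file format; the only outside fact is that there are 72 points to the inch.

```python
POINTS_PER_INCH = 72  # standard typographic points per inch


def rgb_to_hex(r, g, b):
    """Format an 8-bit RGB triple as an uppercase #RRGGBB string."""
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError(f"channel out of range: {channel}")
    return f"#{r:02X}{g:02X}{b:02X}"


# Hypothetical container for the settings listed above.
style = {
    "font": ("Futura Medium", 10),
    "line_width_in": 0.016,
    "bold_width_in": 0.032,
    "bond_length_in": 0.2,
    "colors": {
        "red": (186, 6, 6),
        "dark_blue": (55, 6, 123),
        "light_blue": (118, 146, 183),
    },
}

if __name__ == "__main__":
    for name, rgb in style["colors"].items():
        print(f"{name}: {rgb} -> {rgb_to_hex(*rgb)}")
    for key in ("line_width_in", "bold_width_in", "bond_length_in"):
        print(f"{key}: {style[key]} in = {style[key] * POINTS_PER_INCH:.3f} pt")
```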

(my attempt at a Nicewicz-style drawing)

Although I still can't figure out how to get the acridinium catalyst to look right. Bolding the correct bonds and wrangling it into the right orientation with the Structure Perspective tool in ChemDraw gets it close, but the double bonds get a little squished on some of them. And I still think the font isn't quite right. Maybe there's a Futura Light that they use that I don't have installed.

In any case, I wish I could make figures that look as good as Nicewicz's without obviously copying the style. Mine aren't too bad, I guess. At least I'm not [putting] [every] [word] [in] [brackets].

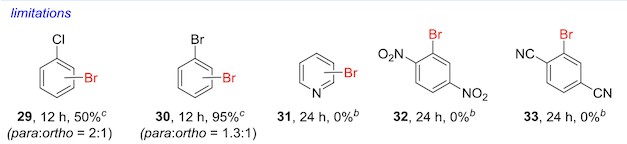

Limitations

Really cool paper out in JACS yesterday detailing an electrophilic aromatic substitution reaction that works on really electron-deficient rings. With some cheap pool chemicals, a pretty simple benzenesulfonic acid catalyst, and the world's most magical solvent, you can stick a bromine on some pretty tough rings.

But as interesting as that is, my favorite part of the paper is the portions of the figures that list the method's limitations.

There's an obvious incentive in a methods paper like this to market the method in as positive a light as possible. A huge percentage of papers probably leave out results that didn't turn out well because it makes the method look weaker. But when you do see this sort of thing, it's on really high-quality papers – and it's pretty likely that you can trust the method to work on the first shot.

Models and Malarky

Every chemical engineer loves a good computational model. You have a reaction stream, you add some stuff, and would you look at that, there will be a minor exotherm. You'd better cool it down a bit before adding the stuff and be sure to add it slowly. That'll add some capex and time costs, but that's what the budget is for.

Models are great. But they usually need verification in the real world.

I've been called a radical empiricist for this view (not that kind, or that kind). But I don't think it's unreasonable. Calculated models are not the answer. They're the question.

This came up at work lately with a predicted exotherm on mixing two solutions. The modeling software spat out a nearly 20 °C exotherm, which was ridiculous given the components. The modelers asked for help, I measured it in the lab, and it was actually a 2 °C endotherm in a flask. The hunt for software bugs begins.

But a lot of times, when it's more subtle, that kind of result is overlooked or ignored. At best, you end up over-provisioning your equipment and wasting your budget. Or maybe your timeline gets pushed back because you have to source a beefier part. But at worst, well, kaboom.

It's the same problem as with organic reaction mechanisms. Nature is complex. We can't yet accurately simulate every bit of the universe down to the boson and quark or whatever. So we compromise and make models. Usually they're pretty good. Usually.

So verify your models. Maybe it's my bias as a process chemist, but at the end of the day, it's my job to make sure product gets into the drum. When the equipment is designed and built wrong because the model said it was fine, it makes that job a lot harder.

Why Sparteine?

I always scratch my head when papers like this one show up in the ASAPs. Sparteine is a weird chiral ligand. The (+) version is comparatively inexpensive ($18/gram right now at Oakwood if you buy 10 grams), but the (-) version is infamously expensive or impossible to source ($595/gram right now at Oakwood). The paper in question only mentions the (+) version, so if you want the other enantiomer, you'll need to shell out some cash or find another ligand system. I can't help but think there had to be a more flexible chiral amine scaffold to go after.

Painful Retractions

Two pretty rough retractions today in JACS (1, 2). Same group, same authors list, same story - raw NMRs and HPLC traces were edited or fabricated.

It's difficult to figure out how to feel about these things. At first, you feel kind of bad for the professor here. Their grad student faked data and now their name has an asterisk beside it.

But it's also not very hard to imagine a lab culture where the only acceptable results are good results, and not getting the right product or a good ee or a high yield is unacceptable. Professors have been known to demand results in failing projects before letting students graduate.

Not every case of data fabrication is a result of a desk-pounding PI. This one probably isn't, given the level of investigation that seems to have happened before retraction.

But I bet a lot of them are.